New and Improved in UE4.27

For many virtual productions, the Unreal Engine is at the core of the technical pipeline. This is especially true of LED volume stages. In UE4.27, Epic Games has dramatically improved a number of key workflows. We spoke to Miles Perkins, Industry Manager, Film & Television at Epic Games, about how these will affect crews on set and the technical pipeline of an LED stage.

LED Stage in-camera VFX workflows

The 4.27 release introduces a set of improvements to the efficiency, quality, and ease of use of Unreal Engine’s in-camera visual effects (ICVFX) toolset. In particular, it includes a major refactoring of the nDisplay code and faster light baking. These make it faster and more reliable to use UE4 in a virtual production pipeline, and they normalize the role of the VP UE4 team on set, letting it function much more like any other department in the filmmaking process.

The significant new VP improvements include:

- Switchboard

- Improved nDisplay set up

- Remote Control

- Level Snapshot

- Stage Monitor

- Faster light baking with GPU Lightmass

There is also OCIO support and other render improvements such as motion blur.

Switchboard

Stage operability is a primary focus of the 4.27 release. The new Switchboard tool is a key part of that focus and it is the interface for controlling all the stage machines from a single machine. “If you’re onstage, typically there are multiple people who are doing multiple things. In a multi-user session, everybody’s responsible for their machines and getting them up, where the data is, etc, and it can be overwhelming,” says Perkins. What Epic’s engineers came up with, he explains, were new tools to streamline these processes on set. “Now it can really all be handled by one person who is the ‘volume control’. Switchboard has the ability to launch all the machines, understand the status that they’re in, and make sure that they’re ready to shoot.”

On a stage previously, a production could require quite a sizable team to look after the machines handling the camera tracking, the control and monitoring of all the render nodes, and coordinating the data that is coming off various machines in a synchronized fashion. “Now we are seeing this move from multiple people in the control area to having just say two people,” Perkins points out. “Getting this to work well was something that was really important to us.”

nDisplay Setup

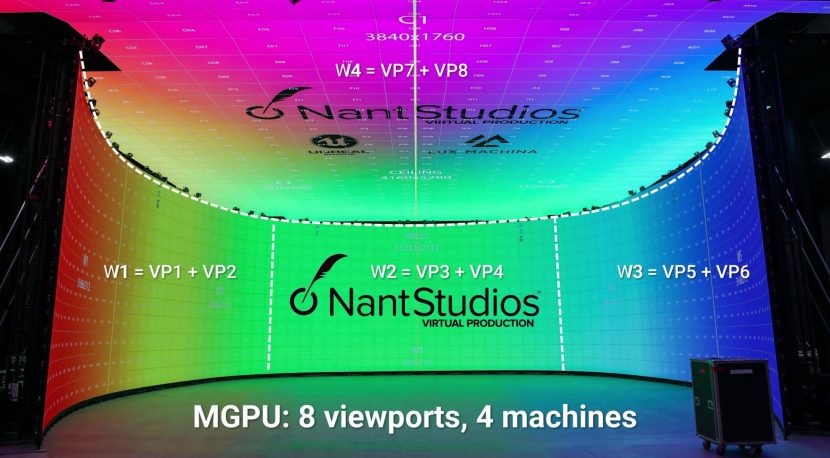

In 4.27 there is a new 3D Config Editor which allows teams to set up nDisplay for LED volumes or indeed any other multi-display rendering application. All nDisplay-related features and settings are now combined into a single nDisplay Root Actor for easier access, and the addition of multi-GPU support now enables nDisplay to scale more efficiently. Multi-GPU support also enables users to maximize resolution on wide shots by dedicating a GPU to in-camera pixels, and to shoot with multiple cameras, each with its own uniquely tracked frustum.

“The overscan is really important and related to the multi-GPU support. In the past, with the single GPU approach, basically every GPU was rendering your inner frustum, and part of the outer frustum,” explains Perkins. Now in UE 4.27, one can allocate just the inner frustum to a GPU. “So this is going to allow you to scale up, you can overscan if you need to and you can modify that.” This lets users dedicate far more performance to the critical inner frustum than before. “For example, say you’re shooting and you have a bloom or something like that with your lens. Well, now you can overscan this, which will make it really big, and thus a more accurate bloom.” This is key because, without overscan, a light that sits just outside the frustum but still affects the lens is not included in the render, so it cannot bloom accurately.

The production-ready C++ BP Root Actor also consolidates all nDisplay-related features and topics under one roof: from the configuration file and in-camera VFX, to previewing your display cluster in Unreal Engine. “This aspect is system-wide and for example, it would show that there could potentially be a moiré issue, that you would not see while standing on the stage. It would show ‘Hey, this camera got within a certain distance from the wall and there could potentially be a problem here’, for example,” explains Perkins. “Consolidating into that single actor for a simpler workflow is important, so before you even get to the stage, you can make sure everything is correct, and start to run through things to understand what the stage is going to do.”

For post & VFX teams, there’s a visualizer for nDisplay. There is also post-process support without blueprints, so that when the work gets into post-production, the team can make sure its workflows are consistent with what was seen on the stage. “There’s an apples-to-apples comparison when you get into post, if you have to get into post for something,” he adds.

Level Snapshot

On set, it is often critical to know exactly how a stage was set and be able to come back to the exact iteration of lighting and controls, sometimes days or even weeks later. The new Level Snapshots let users easily save the state of a given scene and later restore any or all of its elements, making it simple to return to a previous setup for pickup shots or for creative iterations/reshoots.

Level Snapshots also provide robust control over the snapshot restore process, enabling users to pick and choose what to restore from a snapshot if they do not want or need to bring back absolutely everything. On stage during a shoot, Level Snapshots are designed to help the stage operator accommodate the types of complex, off-the-cuff restore requests that filmmakers may come up with, for example, the director saying: “let’s go back to take 6 and move back all the trees except for these five trees.” Level Snapshots were previously a beta feature in 4.26 and have proven to be popular.
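To make the selective-restore idea concrete, here is a minimal Python sketch of the concept. It is not Unreal Engine code and does not use the Level Snapshots API; the scene dictionary, the actor names, and the `take_snapshot` and `restore` helpers are all hypothetical, chosen only to illustrate a "restore the trees, except these ones" request.

```python
from copy import deepcopy


def take_snapshot(scene):
    """Return an independent copy of the scene state (actor name -> properties)."""
    return deepcopy(scene)


def restore(scene, snapshot, include=None, exclude=()):
    """Restore selected actors from a snapshot into the live scene.

    include: optional predicate on the actor name; None selects every actor.
    exclude: actor names that must be left exactly as they are now.
    Returns the sorted names of the actors that were restored.
    """
    skipped = set(exclude)
    restored = []
    for name, props in snapshot.items():
        if name in skipped:
            continue
        if include is not None and not include(name):
            continue
        scene[name] = deepcopy(props)
        restored.append(name)
    return sorted(restored)


# Hypothetical stage state at "take 6".
scene = {
    "Tree_01": {"x": 0.0},
    "Tree_02": {"x": 5.0},
    "Tree_03": {"x": 10.0},
    "KeyLight": {"intensity": 8.0},
}
take_6 = take_snapshot(scene)

# Later takes move the trees and dim the key light.
scene["Tree_01"]["x"] = 1.5
scene["Tree_02"]["x"] = 7.0
scene["Tree_03"]["x"] = 12.0
scene["KeyLight"]["intensity"] = 4.0

# "Go back to take 6 and move back all the trees except Tree_03."
restored = restore(
    scene,
    take_6,
    include=lambda name: name.startswith("Tree_"),
    exclude=["Tree_03"],
)
print(restored)  # ['Tree_01', 'Tree_02']
```

In this sketch the key light keeps its current intensity and `Tree_03` stays where it was moved, while the other two trees return to their take 6 positions.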

Remote Control

Controlling UE4 remotely in a live environment is now easier in 4.27, thanks to several improvements that allow users to control more properties and functions, and to replicate them properly.

On a virtual production stage, operators can now expose properties to be controlled by OSC, DMX, MIDI, and C++, along with hardware control surfaces. With the 4.27 release, any Remote Control Preset can be controlled with the protocol of the user’s choice. This gives virtual production and live events stage operators the ability to use many hardware and software solutions to drive the action in real-time.

Each property can be bound to OSC, MIDI, or DMX channels. Users can set inputs and ranges for each value, and presets can be saved and recalled easily.
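As an illustration of what setting inputs and ranges for a bound value means, the following Python sketch maps a raw controller value onto a property range. It is a conceptual example only, not the Remote Control API; the `map_input` helper and the example ranges (a 7-bit MIDI value driving a light intensity, an 8-bit DMX value driving a color temperature) are assumptions made for this sketch.

```python
def map_input(raw, in_min, in_max, out_min, out_max):
    """Linearly map a raw controller value onto a property range.

    The raw value is clamped to [in_min, in_max] first, so an out-of-range
    message cannot push the property past its configured limits.
    """
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    clamped = max(in_min, min(in_max, raw))
    t = (clamped - in_min) / (in_max - in_min)
    return out_min + t * (out_max - out_min)


# Hypothetical binding: MIDI fader (0..127) drives a light intensity of 0..10.
print(map_input(127, 0, 127, 0.0, 10.0))  # 10.0
print(map_input(0, 0, 127, 0.0, 10.0))    # 0.0

# Hypothetical binding: DMX channel (0..255) drives color temperature in kelvin.
print(map_input(255, 0, 255, 2700.0, 6500.0))  # 6500.0
print(map_input(300, 0, 255, 2700.0, 6500.0))  # 6500.0 (input clamped)
```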

Stage Monitor

Stage Monitor is exactly that: it monitors the stage for any issues during filming. For example, “it would flag if there was a frame glitch or something like that, that isn’t noticeable by eye,” says Perkins. “It will record that, and it will let you know at the end of the take, ‘Hey, there were some issues that you might want to pay attention to’.” Stage Monitor allows this to be further broken down into what was happening with each one of the machines, to help isolate a problem to a particular machine on set. “It will have a log so that you clearly know what’s going on, in any take,” he adds. “It can be hard to notice if something drops to 23 fps momentarily when you are running at 24 frames per second, but you need to know the pipeline has actually skipped a frame.”
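The kind of check Perkins describes can be sketched in a few lines of Python. This is a conceptual illustration, not how Stage Monitor is implemented; the `find_dropped_frames` helper, the machine names, and the timestamp data are all hypothetical. The idea is simply that at 24 fps consecutive frames should arrive about 1/24 of a second apart, so a gap close to two frame times means one frame was skipped.

```python
def find_dropped_frames(timestamps, fps=24.0):
    """Return (index, missed) pairs for gaps that span more than one frame.

    timestamps: presentation times in seconds, in increasing order.
    index is the position of the frame that arrived after the gap;
    missed is the estimated number of frames skipped before it.
    """
    frame_time = 1.0 / fps
    issues = []
    for i in range(1, len(timestamps)):
        gap = timestamps[i] - timestamps[i - 1]
        missed = round(gap / frame_time) - 1
        if missed >= 1:
            issues.append((i, missed))
    return issues


# Hypothetical per-machine logs for one take: node_b skips frame number 3.
take_log = {
    "node_a": [n / 24.0 for n in range(5)],
    "node_b": [n / 24.0 for n in (0, 1, 2, 4, 5)],
}

report = {name: find_dropped_frames(ts) for name, ts in take_log.items()}
print(report)  # {'node_a': [], 'node_b': [(3, 1)]}
```

Grouping the results by machine mirrors the per-machine breakdown described above: the end-of-take report points at `node_b` rather than at the stage as a whole.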

Faster light baking

With the faster light baking in 4.27, the turnaround time for changing the lighting of a shot has shortened, and this dramatically affects the workflow on set. While UE4 has always been real-time, for complex scenes the creative process could be interactive, but getting those changes fully ready for filming would take some time. “The whole idea behind being able to use a game engine is that you want to be able to light ‘in the moment’ right then and there and see exactly what’s going on, because filmmaking is an iterative process,” says Perkins.

One of the normal aspects of VP is to use features such as global illumination, or others that stress real-time rendering even further, without compromising performance. “We need to keep to 24 FPS and the way we do this is to allow you to move the lights interactively, but when it becomes a little bit more computationally heavy, you can bake in the moment,” says Perkins. “And what we are aiming for in 4.27 is to allow you to bake in basically the same amount of time as it would take you to bring in practical lighting.”

This is in contrast to some previous experiences where teams would pre-light and bake for use the next day. Normally, the iterative process on set is not to fully relight, but to adjust one or two lights, perhaps fine-tuned to the particular take or shot that you are working on. “Normally when you load a major scene,” explains Perkins, “you already have a good idea on the lighting, but when you are there, on the set, with the actors, you’re going to want to massage it a bit based on what is in the volume, the practical lighting, and everything integrates with the physical space.”

Given the history of how sets operate, adding a light has always taken a few minutes, and so Epic is aiming to make the digital lights just as easy to work with. “It could take two minutes, it could take 10 minutes, but it’s still something that is still very interactive and fits within the guidelines of a typical production,” he adds. “It’s a huge win because I have to say this has been the holy grail – the ability to do it just the same as adjusting practical lights between takes.”

GPU Lightmass significantly reduces the time it takes to generate lighting data for scenes that require global illumination, soft shadows, and other complex lighting effects that are expensive to render in real-time. Since users can see the results progressively, it’s easy to make changes and start over without waiting for the final bake, making for a more interactive workflow.

For in-camera VFX workflows, GPU Lightmass enables crews to modify virtual set lighting far more quickly than before, making productions more efficient and ensuring that creative flow is not interrupted.

Unreal Engine 4.27 offers many enhancements to GPU Lightmass, including more feature support and increased stability. The system uses the GPU rather than CPU to progressively render pre-computed lightmaps, leveraging the latest ray tracing capabilities with DirectX 12 (DX12) and Microsoft’s DXR framework.

Director of Photography Matthew Jensen agrees with the benefits of the new tools. “It’s so much easier now to be able to make the changes in real-time, see their effects, and then make adjustments from there,” he says. “It allows for a happy accident. It allows for more spontaneity in the creative process.”

OCIO

Additionally, 4.27 adds support for OpenColorIO to nDisplay, ensuring accurate color matching of Unreal Engine content to what the physical camera sees on the LED volume.

Epic’s focus in virtual production, according to Perkins, has been on cinematographers. “If you step back, an LED stage is really a lighting tool. Being able to have real color control is key, whether we’re talking about per node, or being able to isolate the ceiling, because it is being seen off axis.” Now the team can color time the ceiling independently of the walls, as well as carry a color profile from “the creation of the content all the way through the capture, and to post, which is absolutely critical, otherwise, things just don’t line up,” he explains.

Virtual Camera

Also in 4.27, Unreal Engine’s Virtual Camera system has been significantly enhanced, adding support for more features such as Multi-User Editing, and offering a redesigned user experience and an extensible core architecture. Live Link Vcam, a new iOS app, enables users to drive a Cine Camera in-engine using an iPad.

Process Shots & Motion Blur

There are also improvements for producing correct motion blur in traveling shots, accounting for the physical camera with a moving background. LED volumes with UE4 took off after the SIGGRAPH 2019 LA stage demo and the reaction to the work seen in season 1 of The Mandalorian. Now, two years later, the industry is able to offer a wider set of shooting scenarios, but some producers are still considering LED volumes only for more passive background replacement. “We have noticed that there are a lot of people that are thinking of VP in just one simple way, either it’s an exterior or interior – it is still a static image as opposed to something that’s more dynamic,” explains Perkins. “We really focused quite a bit on changing that. And one of those use cases is process shots, or car shots. We have put in quite a bit of effort to aid that and really get, for example, the right motion blur. Render something that is more accurate for the filmmakers.”

Sample Project Download

As we had previously posted, Epic recently teamed up with filmmakers’ collective Bullitt to further prove out these tools by making a short test piece and putting the latest production workflow through its paces. Shot entirely on NantStudios’ LED stage in Los Angeles, the production test capitalized on the new workflows, discussed above, that enable dynamic lighting and background changes on-set with minimal downtime, together with multi-camera and traveling vehicle shoots.

That free sample project is now available for the community to download. UE4.27 is also now released.