PURE4D 2.0 is DI4D’s facial animation pipeline for digital doubles, and the team just released their first short film/promo showing the latest advances of their complete system.

The film stars Neil Newbon, the award-winning actor from Baldur’s Gate 3, and aims to highlight the invaluable contribution skilled actors can make to video games and other animation projects. Newbon is a co-founder of the training facility Performance Captured Academy, which offers courses on motion capture for aspiring actors.

The film uses the same PURE4D 2.0 technology behind Modern Warfare II and III. While DI4D isn’t going to offer production services, everyone in the company pitched in over the five months the project took to complete. The entire scene, including the 3D volumetric stage, the equipment and, of course, the digital Neil Newbon, is fully 3D: it was animated in Maya, rendered in Arnold, and finished at 1920×820. Ten24 provided the body scan. Otherwise, the whole project was done by about 17 DI4D staff, who admitted to feeling greater empathy for their own clients, having completed a film themselves!

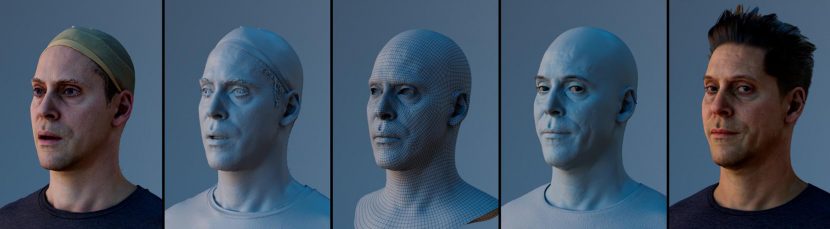

The process starts by scanning Neil Newbon’s face on a 3D photogrammetry capture stage. This step captures the actor’s 3D shape and provides accurate maps of their appearance, such as the details of the skin.

The scan is then wrapped with a mesh topology suited to animation, which the client can define. Next, the team captures the actor inside a 4D facial capture system. DI4D is hardware agnostic, so the team can use either third-party 4D systems or its own.

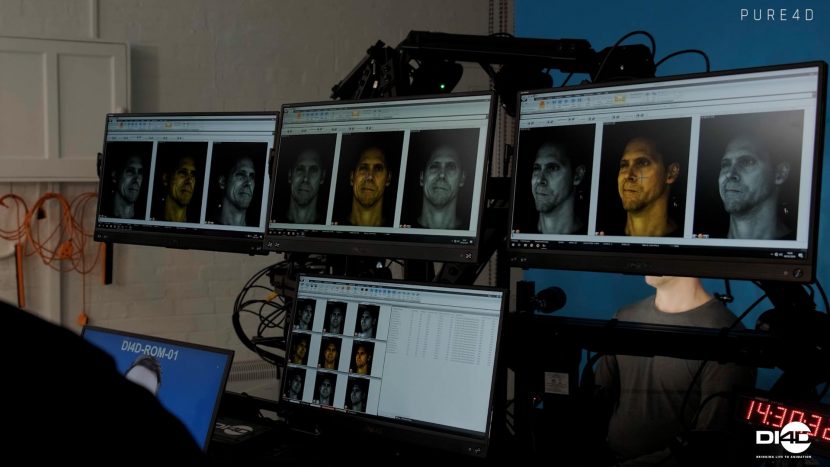

Inside the system, the actor performs phonetic pangrams and dynamic facial expressions, which provide data on the full range of the actor’s facial movements.
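A phonetic pangram is a sentence that contains every speech sound (phoneme) of a language, which is why reading a few of them exercises the full range of mouth shapes. As a rough illustration only, and not DI4D's tooling, the sketch below checks whether a set of recorded takes covers a target phoneme list. The reduced phoneme inventory, the function name `missing_phonemes`, and the sample takes are all hypothetical.

```python
# Hypothetical, heavily reduced phoneme inventory (ARPAbet-style symbols).
# A real inventory for English would contain roughly 40 phonemes.
TARGET_PHONEMES = {"AA", "IY", "UW", "M", "B", "F", "TH", "S", "SH", "L", "R", "W"}


def missing_phonemes(takes, target=TARGET_PHONEMES):
    """Return the target phonemes that no take covers, sorted alphabetically."""
    covered = set()
    for phonemes in takes:
        covered.update(phonemes)
    return sorted(set(target) - covered)


# Each take is the phoneme sequence of one recorded sentence (made-up data).
takes = [
    ["SH", "IY", "S", "AA", "W", "B", "L", "UW"],
    ["M", "AA", "R", "F", "IY", "TH"],
]

print(missing_phonemes(takes))  # an empty list means full coverage
```

An empty result means the takes together touch every phoneme in the target list; any symbols returned would point to sounds that still need a take.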

Then comes the performance capture, which for this film was done at PitStop Productions. Using DI4D’s DHMC or a high-quality third-party system, the team captures the actor’s facial performance simultaneously with body mocap and audio.

DI4D pioneered markerless head-mounted camera (HMC) technology to reduce time on set while improving accuracy. It’s nonintrusive and allows the actor and director to develop expressive and engaging scenes. Next, the mesh topology from the 3D scan is applied to the HMC data. PURE4D uses the maps to ensure correspondence between the 3D scan and the 4D data.

Multiple shapes and pangrams are then tracked and analyzed, allowing the team to form an accurate understanding of the actor’s entire range of facial movement. They track the facial animation for all the selected performances. This process is considerably faster with machine learning, which continually learns the actor’s facial expressions over time. In AAA game production, it both reduces subjectivity and makes it possible to deliver the volume of animation a game requires. The machine learning component uses data from only one actor, and privacy and rights are closely monitored.

Finally, they combine the HMC performance with the 4D data, faithfully reproducing the actor’s performance while achieving a level of fidelity beyond traditional facial animation pipelines.