In 1999, Kim Libreri was the bullet time supervisor on the original Matrix film. Today he is the CTO of Epic Games, and he and the team have just returned to the Matrix. In a remarkable tie-in between the technical advances in UE5 and the new film The Matrix Resurrections, a new tech demo, The Matrix Awakens: An Unreal Engine 5 Experience, has been launched. The cutting-edge showcase can be downloaded for free on PlayStation 5 and Xbox Series X/S. This boundary-pushing technical demo is an original concept piece set within the world of Warner Bros.’ The Matrix. The witty and clever cinematic was written and directed by Lana Wachowski. It features jaw-dropping digital versions of Keanu Reeves and Carrie-Anne Moss, with both actors signing on to be scanned and replicated, reprising their roles as Neo and Trinity both as they are today and as they were in 1999.

Ed. note: The Experience is NOT part of the film. It is a technical demo to showcase what UE5 can do, so there are no spoilers to worry about!

Lana Lan, Senior Character Artist, commented when we sat down with the team earlier this week: “This project really represents a lot of UE5 advancements, better artistry and techniques, and new scanning technologies as far as characters go.” The Epic team’s goal was to test and push all of their new and evolving technology and make sure that UE5 is ready for creators. “I think a lot of the stuff that we learned is going to be folded back into the tools, and we will also be releasing a lot of this as content samples next year,” she adds.

The downloadable console package is about 28 GB, and it is initially designed to show what can be done in real time on a domestic gaming console. Later, parts of the project, such as the city, will be released as downloadable assets from the main Epic site so they can be explored on a desktop computer.

The project also reunited James McTeigue, Kym Barrett, John Gaeta, Jerome Platteaux, George Borshukov, and Michael F. Gay, in collaboration with teams across Epic Games and partners such as SideFX, Evil Eye Pictures, The Coalition, WetaFX (the team formerly known as Weta Digital), and others.

Wachowski, Libreri, and Gaeta have been friends since the days of the trilogy. “When I told them I was making another Matrix film, they suggested I come and play in the Epic sandbox,” says Wachowski. “And Holy Sh*t, what a sandbox it is!”

The video is rendered entirely in real time, with the exception of two pre-rendered shots: the shot where Neo and Trinity multiply, and one wide shot of the city. These were pre-rendered because the data was so vast that loading the assets would have caused a short pause, breaking the pacing of the experience. Other than those shots, the experience renders in real time on a standard console.

Digital Actors

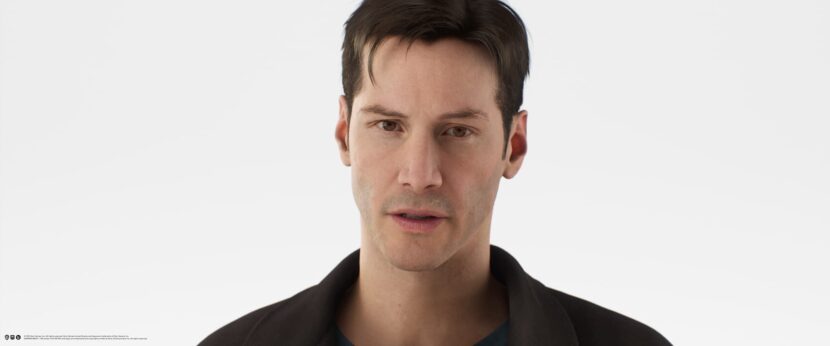

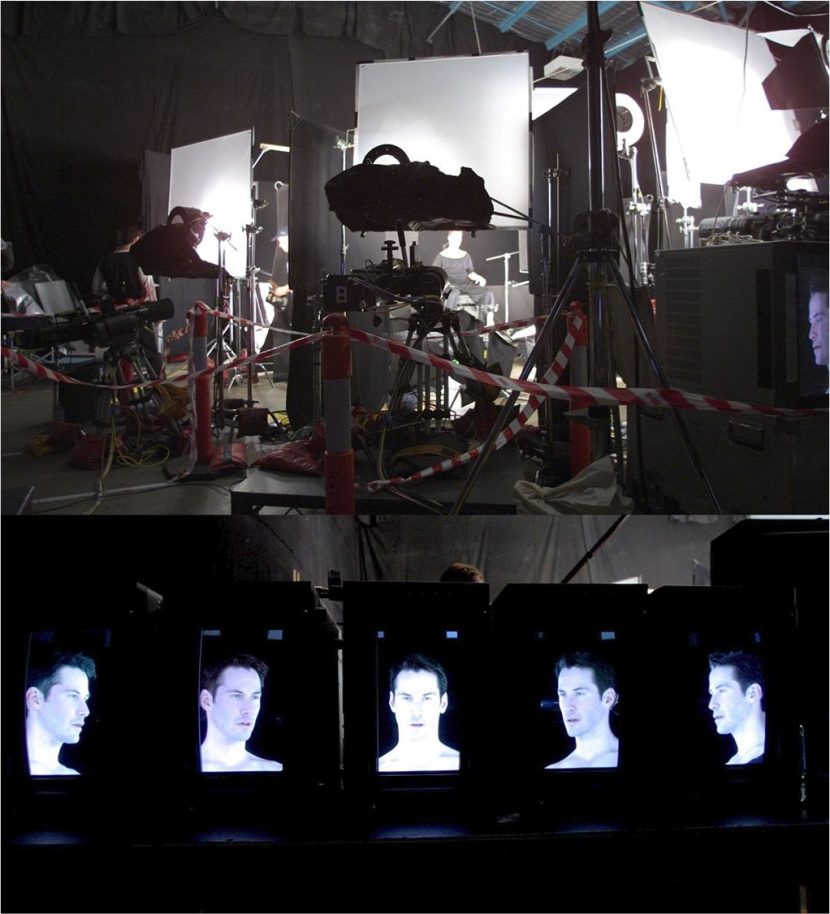

The demo features the performance and likeness of Keanu Reeves and Carrie-Anne Moss as incredibly realistic digital humans. To achieve this, Epic’s 3Lateral team captured high-fidelity 3D scans of the actors’ faces and 4D captures of their performances at its studio in Novi Sad. Reeves and Moss were in Germany at the time, so they flew to Serbia and spent a day doing facial and 4D scanning.

“This was a really tough project,” explained Pete Sumanaseni, Senior Technical Artist at Epic. “We had CG Keanu Reeves in the same shot as real Keanu, like right next to him! You think you can cross the Uncanny Valley, but then you’re showing the real guy right next to digital him, and that was tough.” Judging from the incredible Twitter response when the teaser trailer was released the week before, with people unsure whether they were seeing digital Keanu or the actual actor, the Epic team succeeded.

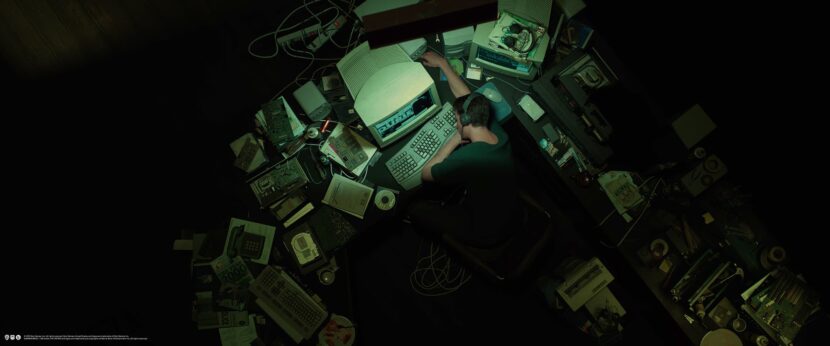

Even after watching the footage several times, people are still unsure which shots are real. “The first time you see the older Keanu, that was a video shoot that we (Epic) filmed of him,” Pete explains. “After they come back, after Morpheus, that was all the CG versions. It was color corrected to match and look the same.” There is also one moment where we see footage in a mirror; this was real film footage composited in UE5. The first shot of Morpheus is also not CG, but it becomes CG when the young Thomas Anderson (Neo) appears. Even the desk scene at the beginning, when Anderson is asleep, was a real-time recreation of a scene from the original film.

The Epic team used many different and new technologies, and the 3Lateral team used a range of scanning solutions and scenarios. Their mobile 4D scanner, with 7 stereo pairs of cameras, produced particularly valuable data. They also used a more classic cross-polarized scan array and HMC II rigs made by Epic’s Cubic Motion team. In particular, they ran a pose-based solver offline for the dialogue captured for IO. But even with the advances in scanning, the CG materials, the rigs, and all the background characters use current MetaHuman technology.

The main difference is the need for specific costumes and hair. For example, IO has a complex hair groom that is not a standard MetaHuman groom; it was created externally and imported. Adam, who had worked on the original MeetMike project, explains how hair in UE has changed over time. “When we did Digital Mike, we used XGen, and then imported small geometry strips into the engine. Since then, we have put a lot of effort into making a better workflow and a better system. Now, rather than bringing in geometry strips, you can bring in splines, and the hair is created from those guide spline hairs.” Once imported, all the hair dynamics are generated in real time in the engine.
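Creating render hairs from imported guide splines can be pictured as interpolation: each strand is a blend of nearby guide curves. The sketch below is a generic illustration of that idea, not Epic’s groom pipeline. It assumes every guide has been resampled to the same number of points, and the function names and random-weight scheme are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical types for illustration: a point is (x, y, z) and a curve is a
# polyline running from root to tip.
Point = Tuple[float, float, float]
Curve = List[Point]


def interpolate_strand(guides: Sequence[Curve], weights: Sequence[float]) -> Curve:
    """Blend guide curves point by point into one render strand.

    Assumes every guide was resampled to the same number of points.
    """
    if not guides or len(guides) != len(weights):
        raise ValueError("need one weight per guide")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    norm = [w / total for w in weights]  # normalize so the blend is convex
    n_points = len(guides[0])
    if any(len(g) != n_points for g in guides):
        raise ValueError("guides must share a point count")

    strand: Curve = []
    for i in range(n_points):
        # Weighted average of the i-th point of every guide.
        x = sum(w * g[i][0] for w, g in zip(norm, guides))
        y = sum(w * g[i][1] for w, g in zip(norm, guides))
        z = sum(w * g[i][2] for w, g in zip(norm, guides))
        strand.append((x, y, z))
    return strand


def grow_children(guides: Sequence[Curve], count: int, seed: int = 0) -> List[Curve]:
    """Create `count` interpolated strands using random convex weights."""
    rng = random.Random(seed)  # seeded so the result is reproducible
    return [
        # The small offset keeps every weight strictly positive.
        interpolate_strand(guides, [rng.random() + 1e-6 for _ in guides])
        for _ in range(count)
    ]


if __name__ == "__main__":
    # Two straight guides hanging down, 2 units apart along x.
    left = [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0), (0.0, -2.0, 0.0)]
    right = [(2.0, 0.0, 0.0), (2.0, -1.0, 0.0), (2.0, -2.0, 0.0)]
    print(interpolate_strand([left, right], [1.0, 1.0]))
    # [(1.0, 0.0, 0.0), (1.0, -1.0, 0.0), (1.0, -2.0, 0.0)]
    print(len(grow_children([left, right], count=100)))  # 100
```

Because the weights are normalized, every generated strand stays inside the envelope of its guides, which is one reason an artist can shape a dense groom by editing only a handful of guide curves.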

All of the actors’ dialogue in the experience is in their own voices, recorded when they were in Serbia. For the most part, the 3Lateral team captured each line the actors delivered in a 4D capture array. In addition to the main script, the actors performed a ROM (range of motion). Some lines were changed or altered, and for these, a machine learning process inferred the facial motions to drive the 3Lateral rig. “But there were no lines delivered close to camera that were not captured and referenced using the new 4D capture tech,” explains Marko Brkovic, Lead Technical Animator at 3Lateral Studio.

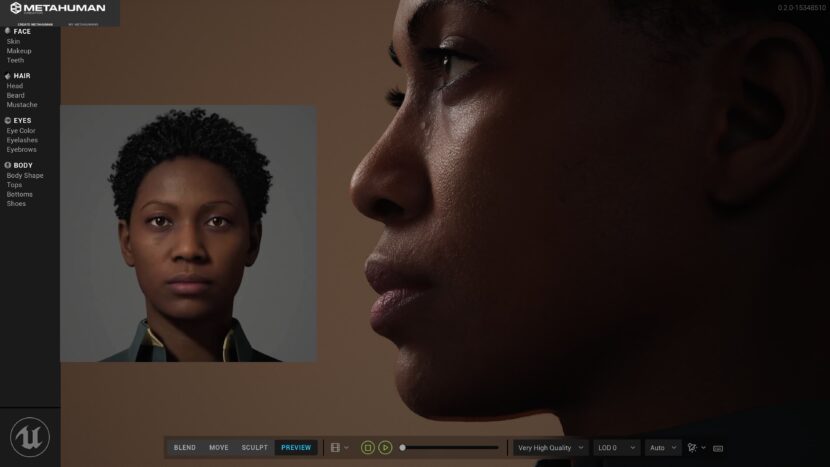

While the lead hero characters received a lot of lookdev, they are still based on standard 3Lateral MetaHumans. “They’re still based on the same MetaHuman framework as all the other characters, and the system that takes care of all the LODs (levels of detail) and all the facial controls is the same across all the characters.”

The characters’ eyes, for example, are all MetaHuman eyes, with some adjustments made to the base model to more accurately model the corneal bulge and the way it transitions to the sclera. The base scan came from the eyes of Epic Games’ Chris Evans. “We went down a rabbit hole of ophthalmology papers, trying to figure out all the different axes of the eyeball and so on,” says Lana Lan. The team built the eye models and compared them against Chris’s eye scans. From there, they adjusted them to accurately represent Keanu’s and Carrie-Anne’s eyes.

De-aging Keanu was one of the more challenging things to achieve, according to Adam Skutt, Senior Character Artist at Epic Games. When Keanu visited 3Lateral to be scanned, he had finished filming with the main unit and was clean-shaven. But de-aging the 57-year-old actor was complex, as there was no perfect reference from 1999. There was a plaster scan, but its expression was not ideal. “We didn’t have a perfect neutral pose, and to add on top of that, one of the references was from the 1999 ‘universal capture’,” comments Adam. Back on the original Matrix films, the key actors were filmed with a set of HD cameras mounted on their sides (9:16 framing). Because of the limited ISO of the Sony F900s of the time, the multi-camera performance capture required very strong lighting. “He was under extremely hot, bright lights, so I think he was squinting, and then there was the outdoor reference, but then he had sunglasses on.”

For the wardrobe, the team had an actual Trinity suit made in real life and fitted onto a model, who was then scanned and used as reference. Adam Skutt led the work on the digital clothes. “We also had a bunch of archives from Warner Bros. We had a reference from the original film of Carrie-Anne Moss. With this reference, we made her outfit in Marvelous Designer.”

MetaHuman Creator

The open-world city environment includes hero character IO, who was one of two launch characters for MetaHuman Creator when it was first released.

The team worked to CPU, graphics, and performance budgets when making the Experience. For example, when the CG characters are on white, there is no background, and that “allowed me to use more ray-traced area lights,” Pete recalls. “Whereas when we got into the car, we had to budget for the complex background, so I had to use fewer lights.” When the project first came together, it ran at only 15 fps, but as things were optimized the frame rate rapidly climbed. “But when we started, everything was way over budget. We used a lot of 4K textures, a lot of geometry, Lumen, which had a cost of its own, and a new shadow map technique for the sunlight. Originally we were basically pushing the limits of everything.” The final experience, however, is indicative of what is possible for a game: running on a PS5 or Xbox Series X/S, it still leaves a lot of CPU headroom that would let a game designer run the normal mechanics of a game. The team did not want to build a demo that was unrealistic for what a new AAA game would be able to achieve.
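Frame-rate figures such as the initial 15 fps are easier to budget against when converted to milliseconds per frame. The sketch below does that conversion and checks a set of per-system costs against a target. The 30 fps target and the per-system costs in the example are assumptions for illustration only; the article does not give the final frame rate or any cost breakdown.

```python
from typing import Dict


def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def required_speedup(current_fps: float, target_fps: float) -> float:
    """How many times cheaper each frame must become to reach the target."""
    return frame_budget_ms(current_fps) / frame_budget_ms(target_fps)


def headroom_ms(costs_ms: Dict[str, float], target_fps: float) -> float:
    """Budget left after summing per-system costs (negative means over budget)."""
    return frame_budget_ms(target_fps) - sum(costs_ms.values())


if __name__ == "__main__":
    print(f"{frame_budget_ms(15):.1f} ms per frame at 15 fps")  # 66.7
    print(f"{frame_budget_ms(30):.1f} ms per frame at 30 fps")  # 33.3
    print(f"speedup needed: {required_speedup(15, 30):.1f}x")   # 2.0
    # Hypothetical per-system costs, invented purely for illustration.
    costs = {"lumen_gi": 8.0, "shadows": 4.0, "geometry": 7.0, "post": 3.0}
    print(f"headroom: {headroom_ms(costs, 30):.1f} ms")          # 11.3
```

Under that assumed target, a scene starting at 15 fps has to do the same work in half the time, which is why trade-offs such as using fewer ray-traced lights in the car scenes matter.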

World Building

Where the sample project Valley of the Ancient gave a glimpse of some of the new technology available in UE5, The Matrix Awakens: An Unreal Engine 5 Experience goes a step further: it is an interactive experience running in real time that anyone can download immediately. The experience consists of cutscenes, then a shooter demo section, and at the end the user is left with a whole city to explore.

Many of the action scenes in the demo originated with crew members driving cars around the city to capture exciting shots. The team was able to use the simulated universe to author cinematic content, like live-action moviemakers scouting a city to find the best streets to tell their story.

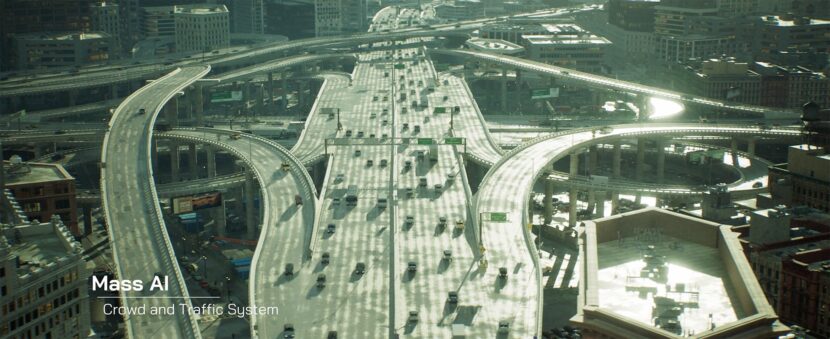

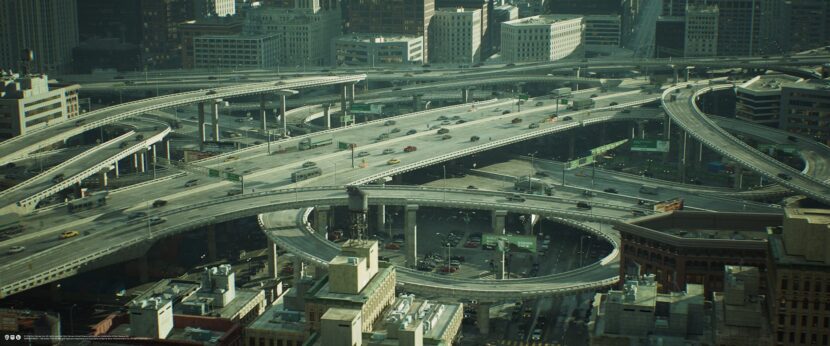

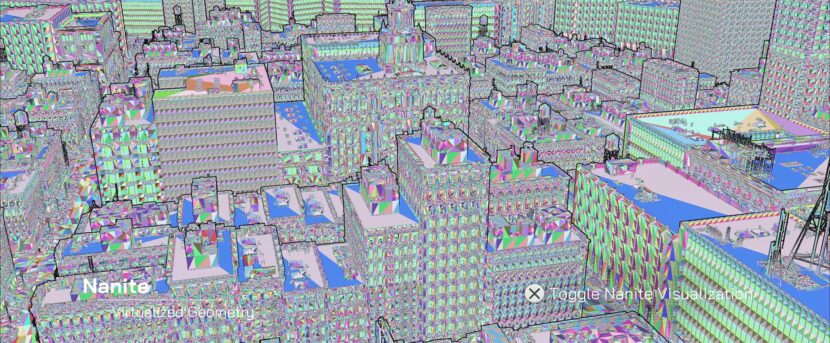

The technical demo also puts the previously showcased UE5 features Nanite and Lumen on display. After the interactive chase sequence, users can roam the dense, open-world city environment, built with Nanite, UE5’s virtualized micropolygon geometry system. It contains a massive 7,000 buildings made of thousands of modular pieces, 45,073 parked cars (38,146 of which are drivable), over 260 km of roads, 512 km of sidewalk, 1,248 intersections, 27,848 lamp posts, and 12,422 manholes, in a virtual world slightly larger than the real downtown LA. As you drive or walk around the city, you pass 35,000 MetaHumans going about their everyday business. “There’s certainly a proximity thing where the ones nearest to us are actual MetaHumans, and then out in the distance, they are actually vertex animated static meshes that are generated from the MetaHumans,” explains Lana Lan.
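Lan’s “proximity thing” amounts to switching each pedestrian’s representation by distance from the viewer. The sketch below is a generic illustration of that idea, not Epic’s crowd system: the `Pedestrian` type, the function names, and the 50 m radius are hypothetical, and a real system would likely weigh more than raw distance.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

FULL_RIG = "metahuman"                       # full character with rig
IMPOSTOR = "vertex_animated_static_mesh"     # baked, cheaper stand-in


@dataclass
class Pedestrian:
    x: float
    y: float


def choose_representation(distance_m: float, full_rig_radius_m: float) -> str:
    """Near pedestrians get the full character; distant ones a baked mesh."""
    return FULL_RIG if distance_m <= full_rig_radius_m else IMPOSTOR


def partition_crowd(
    camera: Tuple[float, float],
    crowd: Iterable[Pedestrian],
    full_rig_radius_m: float = 50.0,  # hypothetical threshold
) -> Dict[str, int]:
    """Count how many pedestrians fall into each representation."""
    counts = {FULL_RIG: 0, IMPOSTOR: 0}
    for p in crowd:
        d = math.hypot(p.x - camera[0], p.y - camera[1])
        counts[choose_representation(d, full_rig_radius_m)] += 1
    return counts


if __name__ == "__main__":
    crowd = [Pedestrian(10, 0), Pedestrian(60, 0), Pedestrian(0, 49.9)]
    print(partition_crowd((0.0, 0.0), crowd))
    # {'metahuman': 2, 'vertex_animated_static_mesh': 1}
```

The design choice being illustrated is that only the few characters close enough to be scrutinized pay for a full rig, while the thousands further away use a much cheaper baked animation.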

The buildings are remarkably detailed because that detail is real Nanite geometry, billions of triangles, rather than normal maps. Since all the detail is built in, a user can walk up to any building and see that it is fully detailed. “They were modelled; all of that is in the geometry, it’s not a normal map. It’s high-resolution models,” says Lana. The average polygon count was 7000k; buildings are made of thousands of assets, and each asset could be up to millions of polygons, so several billion polygons make up just the buildings of the city.

There are Easter eggs to be found in the city, from number plates to shops named after key developers. Lana has a jewellery shop, and Pete has one too, but he has forgotten what it sells! After all, the city is 4.138 km wide and 4.968 km long, so it is easy to get lost.

Lighting

Lumen, Unreal Engine 5’s fully dynamic global illumination system, uses real-time ray tracing to deliver realistic lighting and reflections throughout the interactive parts of the demo. Real-time ray tracing is also used in the cinematic sections to generate the beautiful, realistic soft shadows of the characters. The entire world is lit only by the sun, the sky, and emissive materials on meshes. No light sources were placed for the tens of thousands of streetlights and headlights. In night mode, nearly all lighting comes from the millions of emissive building windows.

The project was huge: it started over a year ago and involved somewhere between 60 and 100 artists, many working remotely. The Matrix Awakens: An Unreal Engine 5 Experience is not a game, nor will it be released as a game in the future, but this tech demo offers a vision of what the future of interactive content could look like, and it sets the bar for state-of-the-art console visuals.

“Keanu, Carrie, and I had a blast making this demo. The Epic sandbox is pretty special because they love experimenting and dreaming big. Whatever the future of cinematic storytelling, Epic will play no small part in its evolution,” says Wachowski.

The new experience takes place in a vast, explorable open-world city that is rich and complex. Covering sixteen square kilometers, photoreal and quickly traversable, it is populated with realistic inhabitants and traffic.